DEJAN: RexBERT for E-Commerce SEO Tasks

- th3s3rp4nt

- Sept. 22, 2025

- 1 min read

Key Takeaways:

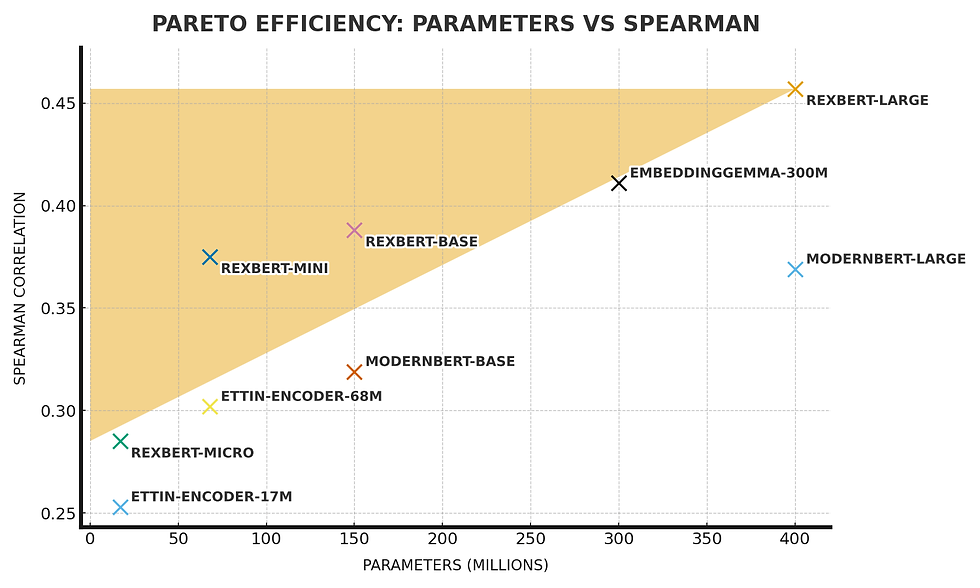

RexBERT is a language model trained specifically on e-commerce data and is reported to outperform general-purpose models on e-commerce tasks.

Potential tasks according to Dan Petrovic:

Smarter Product Title & Description Audits

Detects missing attributes, redundant phrasing, or keyword gaps across large catalogs

Attribute Extraction at Scale

Turns messy descriptions into structured filters (size, color, material, brand) for cleaner navigation and richer schema

Semantic Internal Linking

Powers smarter product & category linking by understanding synonyms, substitutes, and complements

Duplicate Content Detection

Flags near-duplicates across thousands of SKUs—guiding canonicalization, consolidation, or rewrites

Meta Description & Snippet Simulation

Predicts how search snippets might render, letting you A/B test before pushing changes live

Category Page Relevance

Classifies content against category intent (“men’s trail running shoes” vs “generic running shoes”) to strengthen topical depth

Enhanced On-Site Search

Improves query-to-product matching, reducing zero-result pages and increasing conversion
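Several of the tasks above (duplicate detection, internal linking, on-site search) come down to comparing text embeddings. Here is a minimal sketch of that idea, not RexBERT's actual API. The 3-dimensional vectors are made-up stand-ins for what an encoder would produce, and the names `cosine`, `rank_products`, `near_duplicates` and the 0.98 threshold are hypothetical choices for illustration.

```python
import math
from itertools import combinations


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


# Assumption: toy embeddings. In practice these would come from an
# encoder model; real embeddings have hundreds of dimensions.
catalog = {
    "SKU-1 trail running shoe, men": [0.90, 0.10, 0.00],
    "SKU-2 men's trail runner": [0.88, 0.12, 0.02],
    "SKU-3 ceramic coffee mug": [0.00, 0.20, 0.95],
}


def rank_products(query_vec, products, top_k=2):
    """Return the top_k (score, name) pairs most similar to the query."""
    scored = [(cosine(query_vec, vec), name) for name, vec in products.items()]
    return sorted(scored, reverse=True)[:top_k]


def near_duplicates(products, threshold=0.98):
    """Return name pairs whose embeddings are at least `threshold` similar."""
    return [
        (name_a, name_b)
        for (name_a, vec_a), (name_b, vec_b) in combinations(products.items(), 2)
        if cosine(vec_a, vec_b) >= threshold
    ]


if __name__ == "__main__":
    query = [1.0, 0.0, 0.0]  # hypothetical embedding of a shoe-related query
    for score, name in rank_products(query, catalog):
        print(f"{score:.3f}  {name}")
    print(near_duplicates(catalog))
```

With these toy vectors, the two shoe SKUs rank above the mug for the query and are flagged as a near-duplicate pair. On a real catalog the threshold would need tuning against manually reviewed examples.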

"TL;DR: An encoder-only transformer (ModernBERT-style) for e-commerce applications, trained in three phases—Pre-training, Context Extension, and Decay—to power product search, attribute extraction, classification, and embeddings use cases. The model has been trained on 2.3T+ tokens along with 350B+ e-commerce-specific tokens"

Sources: